- $19.00 on Teachable

Course overview

How do programming languages work under the hood? What’s the difference between a compiler and an interpreter? What are a virtual machine and a JIT compiler? And what about the difference between functional and imperative programming?

There are so many questions when it comes to implementing a programming language!

The problem with “compiler classes” in school is that they are usually presented as some “hardcore rocket science” meant only for advanced engineers.

Moreover, classic compiler books start with the least significant topic, such as lexical analysis, and go straight down into the theoretical aspects of formal grammars. By the time they implement the first Tokenizer module, students simply lose interest in the topic, without ever having a chance to start implementing the programming language itself. And all of this is spread over a whole semester of messing with tokenizers and BNF grammars, without understanding the actual semantics of programming languages.

I believe we should be able to build and understand the full semantics of a programming language, end to end, in 4-6 hours, with content that goes straight to the point, is shown in live-coding sessions as pair programming, and is explained in a comprehensible way.

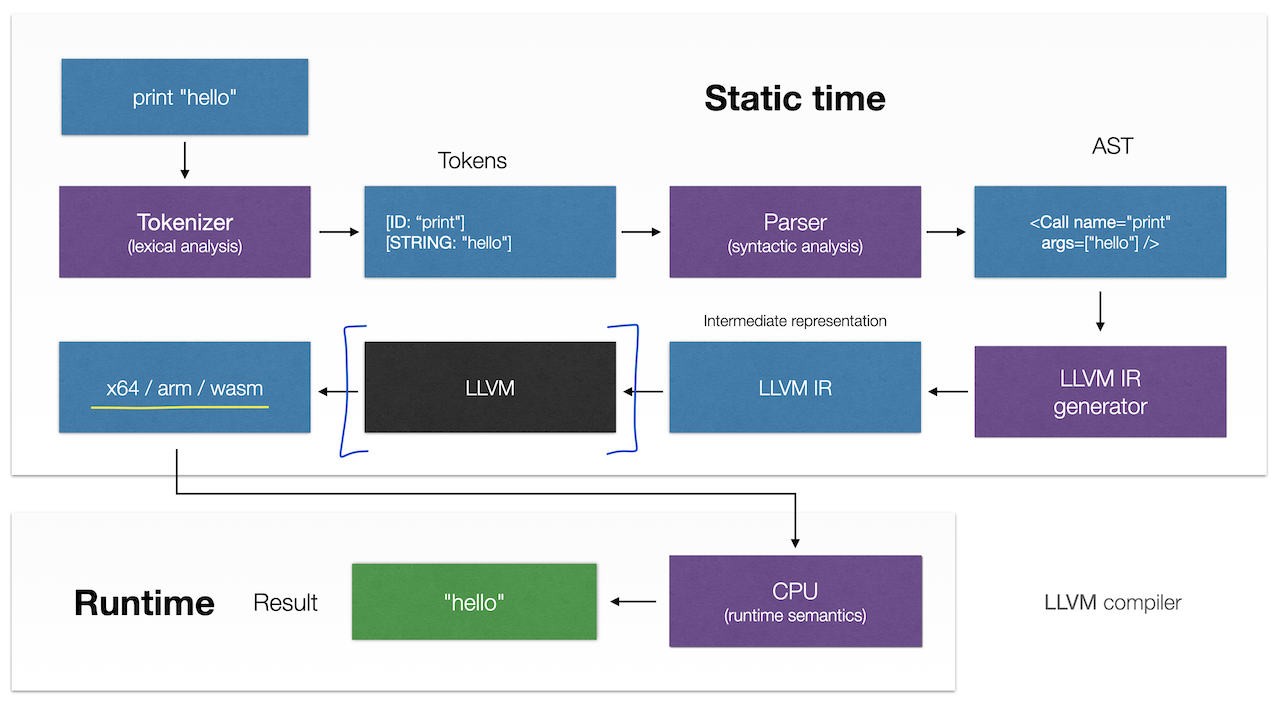

In the Programming Language with LLVM class we focus on compiling our language to LLVM IR and building a lower-level programming language. Working closely with the LLVM compiler infrastructure, you will understand how lower-level compilation works, and how production-level languages such as C++ and Rust work today.

Implementing a programming language will also make you a more professional practitioner in other programming languages.

How to enroll?

You can watch the preview lectures and enroll in the full course, which covers implementing a programming language with LLVM from scratch in an animated, live-annotated format. See the details of what is in the course below.


Prerequisites

There are three prerequisites for this class.

The Programming Language with LLVM course is a natural extension of the previous classes: Building an Interpreter from scratch (aka Essentials of Interpretation), where we also build a full programming language, but at a higher, AST level, and Building a Virtual Machine. Unless you already understand how programming languages work at this level, i.e. what eval, a closure, a scope chain, environments, and other constructs are, you should take the interpreters class as a prerequisite.

Also, since we go down to the lower (bitcode/IR) level where production languages live, basic C++ experience is required. This class, however, is not about C++, so we use only very basic constructs that transfer easily to other languages.

Watch the introduction video for the details.

Who is this class for?

This class is for any curious engineer who would like to gain the skills of building complex systems (and building a programming language is an advanced engineering task!) and obtain transferable knowledge for building such systems.

If you are interested specifically in LLVM, its compiler infrastructure, and how to build your own language, then this class is also for you.

What is used for implementation?

Since lower-level compilers are about performance, they are usually implemented in a low-level language such as C or C++. This is exactly what we use as well, relying mainly on basic C++ features rather than getting distracted by C++ specifics. The code should be easy to port to any other language, e.g. Rust, or even higher-level languages such as Python. Using C++ also makes it easier to implement a JIT compiler later.

Note: we want our students to actually follow, understand, and implement every detail of the LLVM compiler themselves, instead of just copy-pasting from the final solution. Even though the full source code for the language is presented in the video lectures, the code repository for the project contains /* Implement here */ assignments, which students have to solve.

What’s specific about this class?

The main features of these lectures are:

- Concise and straight to the point. Each lecture is self-sufficient, concise, and covers information directly related to the topic, without digressing into unrelated material or talk.

- Animated presentation combined with live-editing notes. This makes the topics easier to understand and shows how the object structures are connected. Static slides simply don’t work for complex content.

- End-to-end live-coding sessions with assignments. The full source code, from scratch up to the very end, is presented in the video lectures.

What is in the course?

The course is divided into four parts, with a total of 20 lectures and many sub-topics in each lecture. Below is the table of contents and curriculum.

Part 1: LLVM Basic constructs

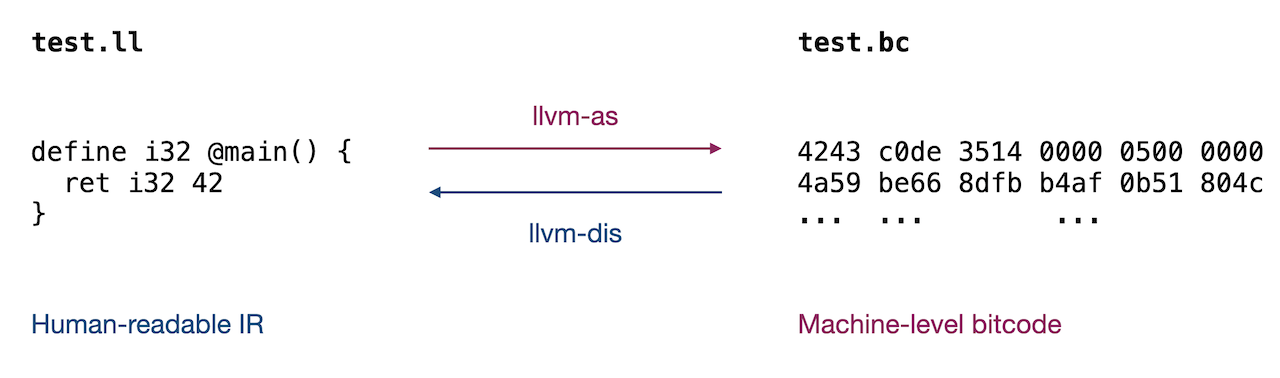

In this part we describe the compilation and interpretation pipeline and start building our language. We discuss LLVM IR, object code, and compilation of the bitcode.

- Lecture 1: Introduction to LLVM IR and tools

  - Introduction to LLVM tools

  - Parsing pipeline

  - LLVM Compiler

  - LLVM Interpreter

  - Clang

  - Structure of LLVM IR

  - Entry point: @main function

  - LLVM Assembler

  - LLVM Disassembler

  - Native x86-64 code

- Lecture 2: LLVM program structure | Module

  - LLVM program structure

  - Module container

  - Context object

  - IR Builder

  - Emitting IR to file

  - Main executable

- Lecture 3: Basic numbers | Main function

  - Main function

  - Entry point

  - Basic blocks

  - Terminator instructions

  - Branch instructions

  - Function types

  - Cast instruction

  - Return value

  - Compiling script

- Lecture 4: Strings | Printf operator

  - Strings

  - Global variables

  - Extern functions

  - Function declarations

  - Function calls

  - Printf operator

- Lecture 5: Parsing: S-expression to AST

  - S-expression

  - Parsing

  - BNF Grammars

  - The Syntax tool

  - AST nodes

  - Compiling expressions

- Lecture 6: Symbols | Global variables

  - Boolean values

  - Variable declarations

  - Variable initializers

  - Global variables

  - Register and Stack variables

  - Variable access

- Lecture 7: Blocks | Environments

  - Blocks: groups of expressions

  - Blocks: local scope

  - Environment

  - Scope chain

  - Variable lookup

  - The Global Environment
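
Lectures 6 and 7 revolve around environments and scope-chain lookup. As a rough, self-contained illustration (not the course’s code), here is a minimal environment sketch. In a compiler the bindings would typically map names to LLVM values such as `llvm::Value*`; to keep the sketch free of LLVM headers it stores plain `int`s instead, and the names `Environment`, `define`, `lookup`, and `assign` are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical environment: a table of bindings plus a link to the
// enclosing (parent) environment, forming a scope chain.
// An int stands in for the value type (e.g. llvm::Value*) in this sketch.
class Environment {
 public:
  explicit Environment(std::shared_ptr<Environment> parent = nullptr)
      : parent_(std::move(parent)) {}

  // Creates (or overwrites) a binding in this scope only.
  void define(const std::string& name, int value) { record_[name] = value; }

  // Reads a variable from the nearest scope that declares it.
  int lookup(const std::string& name) { return resolve(name).record_.at(name); }

  // Updates the variable in the scope where it was declared.
  void assign(const std::string& name, int value) {
    resolve(name).record_.at(name) = value;
  }

 private:
  // Walks the scope chain outward until the name is found.
  Environment& resolve(const std::string& name) {
    if (record_.count(name) != 0) {
      return *this;
    }
    if (parent_ == nullptr) {
      throw std::runtime_error("Variable \"" + name + "\" is not defined");
    }
    return parent_->resolve(name);
  }

  std::map<std::string, int> record_;
  std::shared_ptr<Environment> parent_;
};
```

For example, a block environment created with the global environment as its parent can read a global `x`, assigning `x` from inside the block updates the global binding, and a local `define("x", ...)` shadows it without touching the outer scope.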

Part 2: Functions and Stack

In this part we implement control flow structures such as if expressions and while loops, talk about stack variables, nested blocks and local variables, and also compile functions.

- Lecture 8: Local variables | Stack allocation

  - Type annotations

  - Block environment

  - Stack allocation

  - Static single-assignment (SSA)

  - Assignment expression

- Lecture 9: Binary expressions | Comparison operators

  - Binary expressions

  - Comparison operators

  - Boolean values

- Lecture 10: Control flow: If expressions | While loops

  - Conditional branch

  - Unconditional branch

  - Terminator instructions

  - Nested If-expressions

  - Phi-nodes

  - While loops

- Lecture 11: Function declarations | Call expression

  - Function declarations

  - Parameter types

  - Return type

  - Function calls
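
Lecture 10 lowers control flow into basic blocks joined by conditional and unconditional branches. The C++ sketch below is only an analogy, not LLVM code: it hand-lowers a while loop into labelled “blocks” with `goto`, mirroring the condition/body/exit layout a compiler would typically emit for a loop. The function name `sumBelow` and the block labels are hypothetical, and the `goto` style is deliberate; it is not how you would write ordinary C++.

```cpp
#include <cassert>

// Computes 0 + 1 + ... + (n - 1), written as explicit "basic blocks".
// Each label plays the role of a basic block, and each block ends in
// exactly one terminator: a branch or a return.
int sumBelow(int n) {
  // entry block: mutable locals live in stack slots (compare: alloca)
  int i = 0;
  int result = 0;
  goto loop_cond;  // unconditional branch to the condition block

loop_cond:
  // conditional branch: true -> loop_body, false -> loop_end
  // (in LLVM IR a single `br i1` names both targets)
  if (i < n) goto loop_body;
  goto loop_end;

loop_body:
  result = result + i;
  i = i + 1;
  goto loop_cond;  // back edge to the condition block

loop_end:
  return result;  // return terminator
}
```

For instance, `sumBelow(11)` evaluates to 55. The point of the shape is that the loop condition gets its own block, so the body can jump back to it, which is also why loop variables updated in the body end up either in stack slots or in phi-nodes at the condition block.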

Part 3: Object-oriented programming

In this part we implement OOP concepts such as classes and instances, and talk about heap allocation and garbage collectors.

- Lecture 12: Introduction to Classes | Struct types

  - Class declarations

  - LLVM Struct type

  - GEP instruction

  - Aggregate types

  - Field address calculation

  - Property access

  - LLVM class example

- Lecture 13: Compiling Classes

  - Class declaration

  - Class info structure

  - Class methods

  - Self parameter

- Lecture 14: Instances | Heap allocation

  - New operator

  - Stack allocation

  - Heap allocation

  - Extern malloc function

  - Garbage Collection

  - Mark-Sweep, libgc

- Lecture 15: Property access

  - Prop instruction

  - Field index

  - Struct GEP instruction

  - Getters | Setters

  - Load-Store architecture

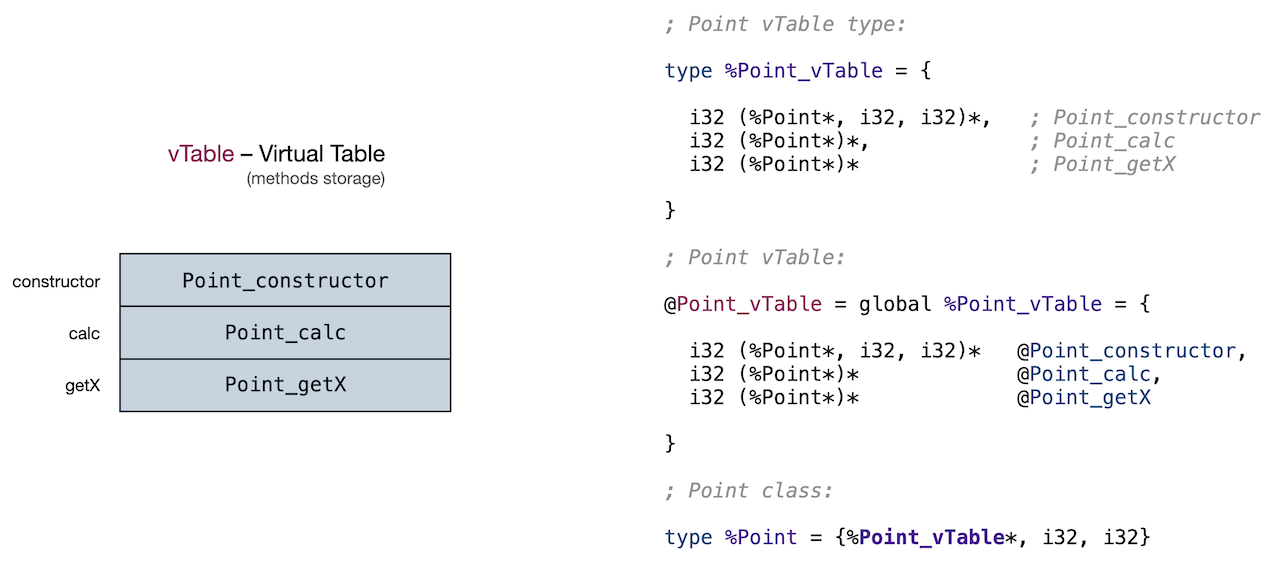

- Lecture 16: Class Inheritance | vTable

  - Class inheritance

  - Virtual Table (vTable)

  - Method storage

  - Dynamic dispatch

  - Generic methods

- Lecture 17: Methods application

  - Method load

  - Method call

  - Argument casting

  - vTable storage
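
Lectures 16 and 17 cover dynamic dispatch through a vTable. As a sketch under our own assumptions (not the course’s exact object layout), here is what a compiled class can look like when written by hand in C++ with plain structs and function pointers: every instance stores a pointer to its class’s vTable as its first field, a subclass embeds its parent as a prefix, and a method call loads the function pointer from the table and passes the instance as the `self` argument. All names (`Point`, `Point3D`, `PointVTable`, `calc`, `callCalc`) are hypothetical.

```cpp
#include <cassert>

struct Point;  // forward declaration for the method signature

// Table of method pointers shared by all instances of one class.
struct PointVTable {
  int (*calc)(Point* self);
};

// Instance layout: the vTable pointer first, then the fields.
struct Point {
  const PointVTable* vTable;
  int x;
  int y;
};

// A "subclass" embeds its parent as the first field, so a pointer to that
// field can be used wherever a Point* is expected (prefix-compatible layout).
struct Point3D {
  Point base;
  int z;
};

// Method bodies receive the instance explicitly as `self`.
int pointCalc(Point* self) { return self->x + self->y; }

int point3DCalc(Point* self) {
  // Valid only because `self` is the first member of a (standard-layout)
  // Point3D; this mirrors the downcast a compiler would emit.
  auto* p = reinterpret_cast<Point3D*>(self);
  return pointCalc(self) + p->z;  // reuse the parent method, then add z
}

// One vTable per class; in a compiler these would typically be global constants.
const PointVTable kPointVTable{pointCalc};
const PointVTable kPoint3DVTable{point3DCalc};

// Dynamic dispatch: load the method from the instance's vTable, then call it.
int callCalc(Point* obj) { return obj->vTable->calc(obj); }
```

With `Point p{&kPointVTable, 10, 20}` and `Point3D q{{&kPoint3DVTable, 10, 20}, 30}`, the same call site `callCalc` returns 30 for `&p` and 60 for `&q.base`: the caller only knows it has a `Point*`, and the vTable stored in the object decides which method runs.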

Part 4: Higher-order functions

In this part we focus on closures, functional programming, lambda functions, and implement the final executable.

- Lecture 18: Functors – callable objects

  - Callable objects

  - Functors

  - First-class functions

  - __call__ method

  - Callback functions

  - Syntactic sugar

- Lecture 19: Closures, Cells, and Lambda expressions

  - Inner functions

  - Free variables

  - Lambda lifting

  - Escape functions

  - Shared Cells

  - Closures: Functors + Cells

  - Scope analysis and transform

  - Syntactic sugar

- Lecture 20: Final executable | Next steps

  - eva-llvm executable

  - Compiling expressions

  - Compiling files

  - Optimizing compiler

  - LLVM Backend pipeline

  - Next steps
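
Lectures 18 and 19 describe closures as functors plus cells. The C++ sketch below illustrates that idea under our own assumptions (the names `Cell`, `Counter`, and `makeCounter` are hypothetical, and this is not the course’s generated code): a captured free variable is moved into a heap-allocated cell, and the closure is a callable object, i.e. a functor whose `operator()` plays the role of a `__call__` method, holding a pointer to that cell. Copies of the closure share and mutate the same variable even after the defining function has returned.

```cpp
#include <cassert>
#include <memory>

// A cell boxes a captured variable on the heap so it outlives the stack
// frame of the function that declared it.
struct Cell {
  int value;
};

// The closure as a functor: a call operator (the analogue of __call__)
// plus a shared reference to the cell. In lambda-lifting terms, the inner
// function has become this top-level type, and its free variable is passed
// in explicitly as a cell instead of being looked up in an outer scope.
struct Counter {
  std::shared_ptr<Cell> cell;

  int operator()() const { return ++cell->value; }
};

Counter makeCounter(int start) {
  // `count` is a free variable of the inner function, so it lives in a cell.
  auto count = std::make_shared<Cell>(Cell{start});
  return Counter{count};
}
```

Calling `makeCounter(10)` and invoking the result twice yields 11 and then 12; a copy of that counter continues from the same cell, while a fresh `makeCounter(0)` gets an independent cell. Using `std::shared_ptr` here is just a convenient stand-in for whatever heap and garbage-collection strategy the compiled language uses.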

I hope you’ll enjoy the class, and I will be glad to discuss any questions and suggestions in the comments.

Any plans for an MLIR based compiler series?

@Bob, yes, there might be an extension coming up for MLIR and LLVM backend, depending on the demand.

+1 on the MLIR compiler series. I’d buy it in a heartbeat.