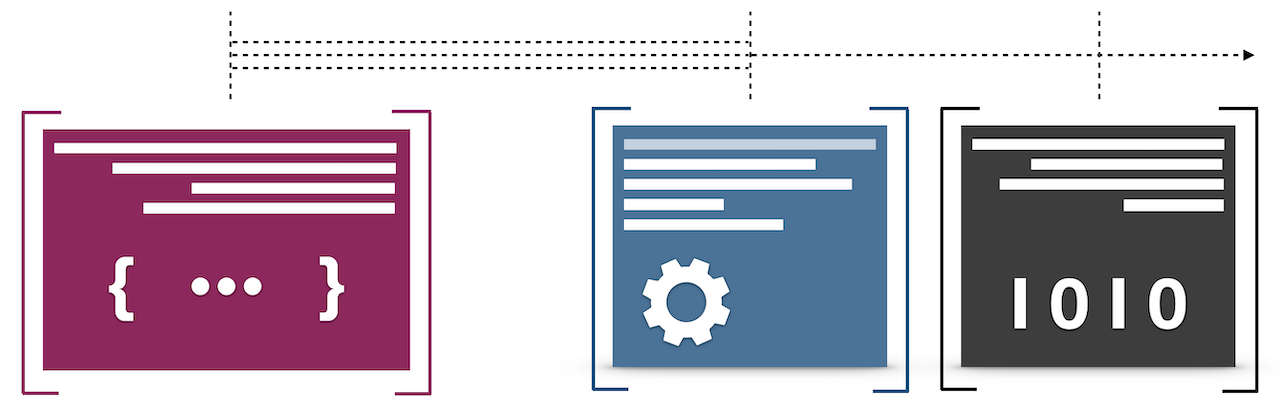

Many books on compiler engineering start with Lexical Analysis, going deep into the formalism of Automata Theory. Having gotten stuck in the semantics of formal state machines, students may lose interest in building programming languages, thinking of it as work for “compiler engineers” only. I believe it is better to start with a language runtime, understanding the bigger picture of its semantics, and only after that to go deeper into the theoretical aspects.

In this note we cover the courses of the Programming Languages Design and Implementation series, and explain in which order to take them for the best practical outcome.

Courses in this series are divided into “… from scratch” parts, and “… Theory” and “… Algorithms” parts. We recommend starting with the practical “from scratch” classes, and moving on to the theory after that.

Note: you may also find all courses combined in a single Programming Languages: Ultimate – 3rd Edition bundle.

Building an Interpreter from scratch

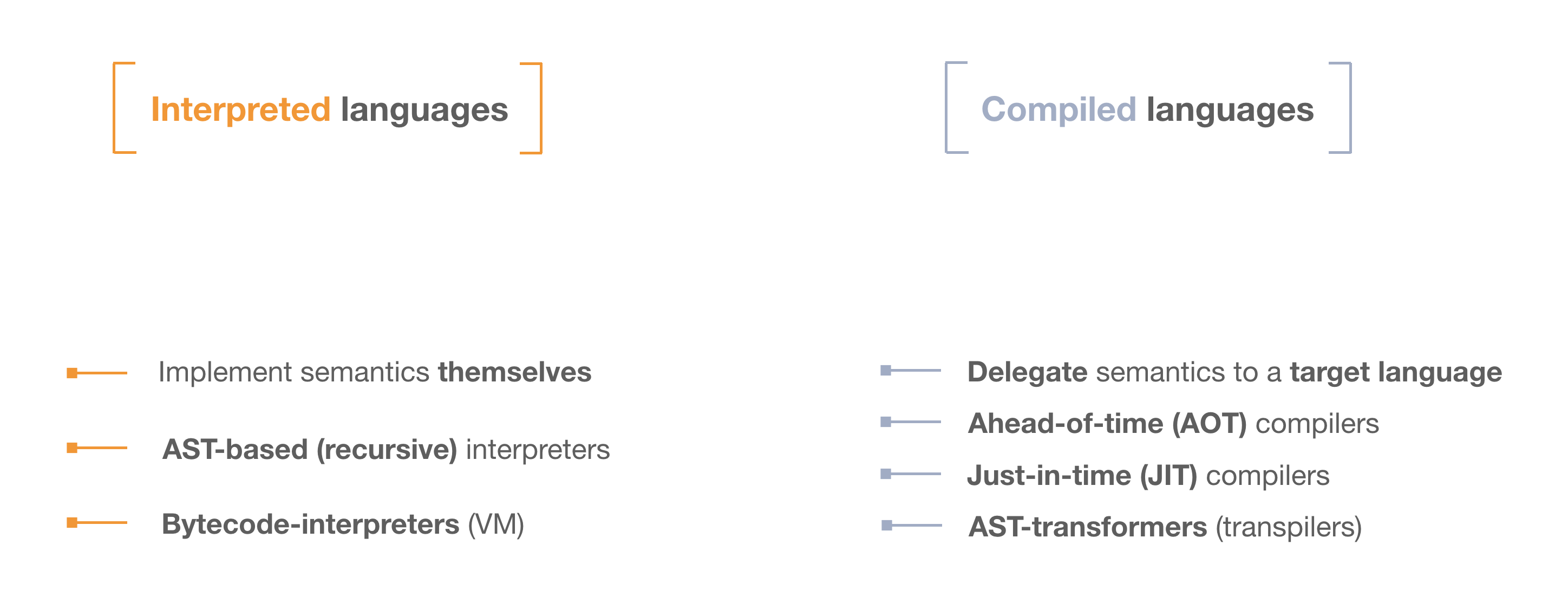

In this course we start our journey into the interesting world of programming languages, talking about different parsing and transformation pipelines and VMs, and eventually implementing an AST interpreter from scratch.

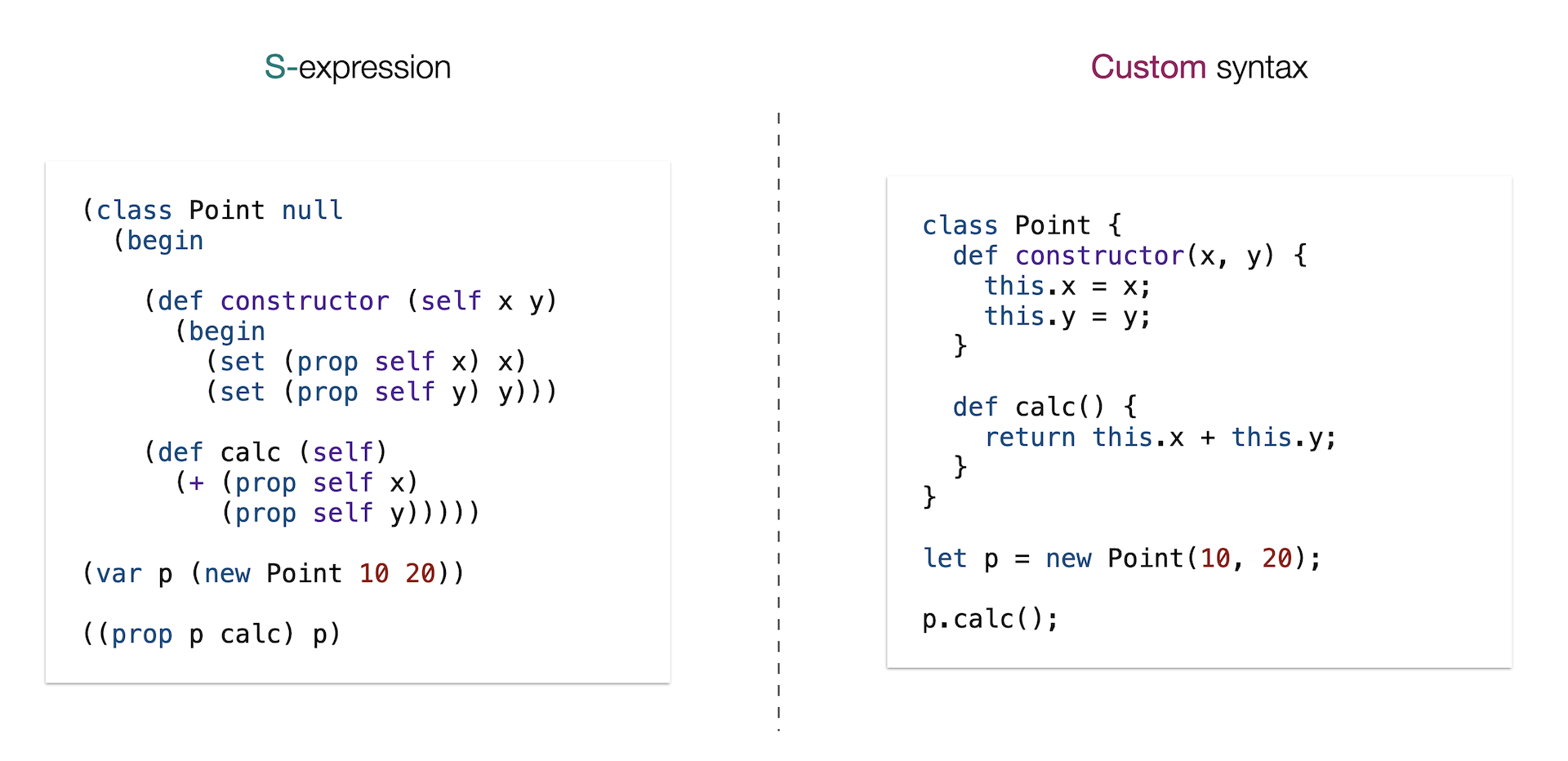

To skip the parsing stage altogether and focus specifically on the runtime (that is, the actual semantics of a programming language), we use the S-expression format as a lightweight syntax. We implement the interpreter in one of the most popular programming languages, JavaScript, and the semantics of our language, Eva, are heavily inspired by JavaScript itself, Python, and other dynamic PLs.
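To make the idea concrete, here is a minimal sketch (not the course's Eva code) of what skipping the parser buys: if programs are written as S-expressions encoded as nested JavaScript arrays, an evaluator can walk them directly. The `evaluate` function and the `var`, `if`, and `begin` forms are hypothetical choices for illustration.

```javascript
// A minimal sketch, not the course's Eva implementation: programs are written
// as S-expressions encoded as nested JS arrays, so there is no parser at all.
function evaluate(exp, env = Object.create(null)) {
  // Numbers evaluate to themselves.
  if (typeof exp === "number") return exp;

  // Double-quoted strings are string literals, e.g. '"hello"'.
  if (typeof exp === "string" && exp.startsWith('"') && exp.endsWith('"')) {
    return exp.slice(1, -1);
  }

  // Any other string is a variable name.
  if (typeof exp === "string") {
    if (!(exp in env)) throw new ReferenceError(`Variable "${exp}" is not defined`);
    return env[exp];
  }

  // Otherwise it is a list: (operator operand1 operand2 ...).
  const [op, ...args] = exp;
  switch (op) {
    case "+": return evaluate(args[0], env) + evaluate(args[1], env);
    case "*": return evaluate(args[0], env) * evaluate(args[1], env);
    case ">": return evaluate(args[0], env) > evaluate(args[1], env);
    case "var": return (env[args[0]] = evaluate(args[1], env));
    case "if":
      return evaluate(args[0], env) ? evaluate(args[1], env) : evaluate(args[2], env);
    case "begin": {
      // Evaluate each expression in order; the last value is the result.
      let result;
      for (const e of args) result = evaluate(e, env);
      return result;
    }
    default:
      throw new SyntaxError(`Unknown form: ${op}`);
  }
}

// (begin (var x 10) (if (> x 5) (* x 2) 0))
console.log(evaluate(["begin", ["var", "x", 10], ["if", [">", "x", 5], ["*", "x", 2], 0]])); // 20
```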

By the end of this course students learn how to build their own programming language from scratch at a higher (AST) level. You will learn how closures work, what activation records and lexical environments are, how function calls work, and how to add support for OOP.
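Here is a hedged sketch of closures and lexical environments, again assuming programs are nested arrays. `Environment`, `evaluate`, and the `lambda`/`set` forms are illustrative names, not necessarily Eva's. The key design choice is that a call's activation record is linked to the environment captured when the function was created, not to the caller's environment.

```javascript
// Hypothetical sketch: each scope is an Environment whose `record` holds the
// bindings (an activation record for function calls) and whose `parent`
// points to the enclosing lexical scope.
class Environment {
  constructor(record = {}, parent = null) {
    this.record = record;
    this.parent = parent;
  }
  define(name, value) {
    this.record[name] = value;
    return value;
  }
  assign(name, value) {
    this.resolve(name).record[name] = value;
    return value;
  }
  lookup(name) {
    return this.resolve(name).record[name];
  }
  // Walk up the scope chain to the environment that defines `name`.
  resolve(name) {
    if (Object.prototype.hasOwnProperty.call(this.record, name)) return this;
    if (this.parent === null) throw new ReferenceError(`Variable "${name}" is not defined`);
    return this.parent.resolve(name);
  }
}

function evaluate(exp, env) {
  if (typeof exp === "number") return exp;
  if (typeof exp === "string") return env.lookup(exp);

  const [op, ...args] = exp;
  switch (op) {
    case "+": return evaluate(args[0], env) + evaluate(args[1], env);
    case "var": return env.define(args[0], evaluate(args[1], env));
    case "set": return env.assign(args[0], evaluate(args[1], env));
    case "begin": {
      // A block introduces a new scope nested in the current one.
      const blockEnv = new Environment({}, env);
      let result;
      for (const e of args) result = evaluate(e, blockEnv);
      return result;
    }
    case "lambda": {
      // A closure remembers the environment in which it was created.
      const [params, body] = args;
      return { params, body, env };
    }
    default: {
      // Function call: (fn arg1 arg2 ...).
      const fn = evaluate(op, env);
      const argValues = args.map((a) => evaluate(a, env));
      // The activation record binds parameters to arguments; its parent is
      // the closure's captured environment (lexical scope), not the caller's.
      const activationRecord = {};
      fn.params.forEach((p, i) => { activationRecord[p] = argValues[i]; });
      return evaluate(fn.body, new Environment(activationRecord, fn.env));
    }
  }
}

// A counter whose `count` lives on in the closure after makeCounter returns.
const program = ["begin",
  ["var", "makeCounter", ["lambda", [], ["begin",
    ["var", "count", 0],
    ["lambda", [], ["set", "count", ["+", "count", 1]]]]]],
  ["var", "counter", ["makeCounter"]],
  ["counter"],
  ["counter"]];

console.log(evaluate(program, new Environment())); // 2
```

The variable `count` is not visible from the global environment, yet each call to `counter` still finds it through the captured scope chain.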

You may find more info and enroll in the course on the class page.

Building a Transpiler from scratch

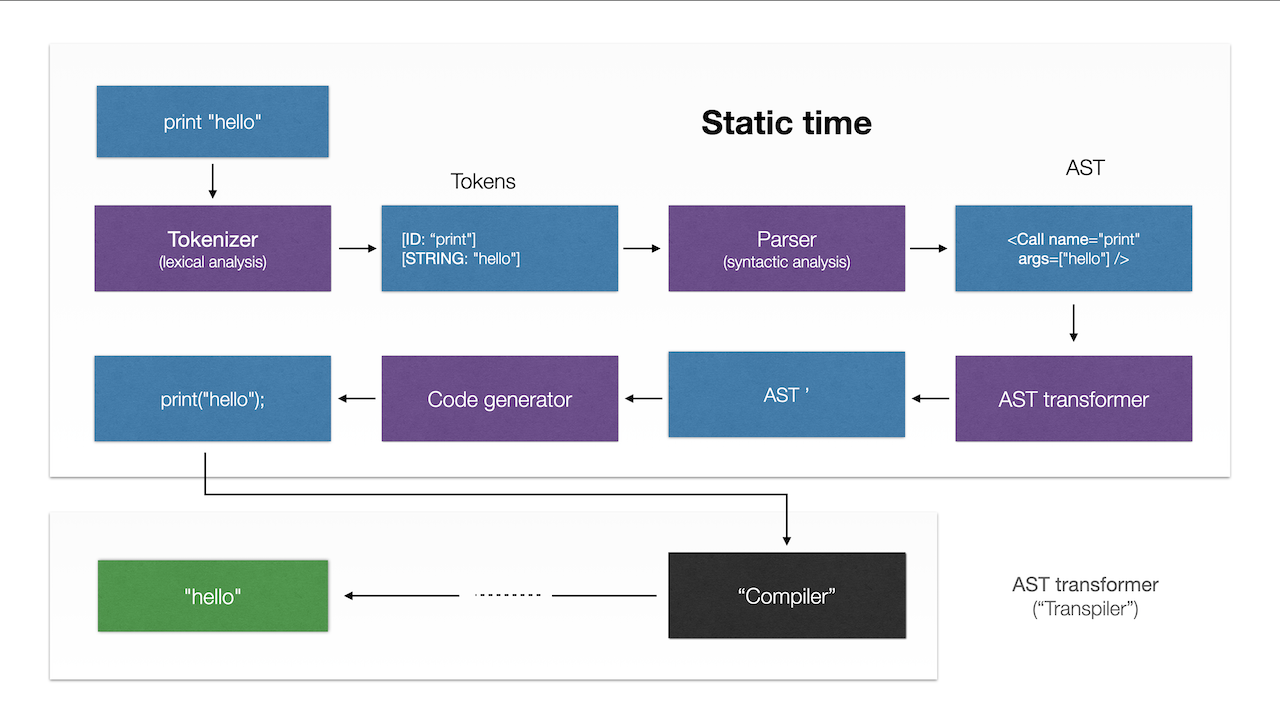

Having implemented the interpreter runtime manually, it’s worth exploring the high-level compilation process performed by transpilers.

In this class we build a concurrent, process-based programming language by compiling it down to JavaScript.

By the end of this class students learn how the translation pipeline works at a higher (AST) level. You will understand how code generation works and the semantics of concurrent processes, and you will compile your language to JS.
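To illustrate AST-level code generation, here is a small sketch that turns S-expression ASTs into JavaScript source text. It is a hypothetical example: the `generate` function and the `def`/`var`/`if` forms are assumptions, and it does not model the concurrent processes of the course's language.

```javascript
// Hypothetical sketch of AST-level code generation: S-expression ASTs
// (nested JS arrays) are translated into JavaScript source text.
function generate(exp) {
  if (typeof exp === "number") return String(exp);
  if (typeof exp === "string") return exp; // an identifier

  const [op, ...args] = exp;
  switch (op) {
    case "+": case "-": case "*": case ">": case "<":
      // Parenthesize every binary expression to preserve the AST's grouping.
      return `(${generate(args[0])} ${op} ${generate(args[1])})`;
    case "var":
      return `let ${args[0]} = ${generate(args[1])};`;
    case "if":
      return `(${generate(args[0])} ? ${generate(args[1])} : ${generate(args[2])})`;
    case "def": {
      // (def name (params...) body)
      const [name, params, body] = args;
      return `function ${name}(${params.join(", ")}) { return ${generate(body)}; }`;
    }
    default:
      // Function call: (fn arg1 arg2 ...)
      return `${generate(op)}(${args.map((a) => generate(a)).join(", ")})`;
  }
}

const js = [
  generate(["def", "square", ["x"], ["*", "x", "x"]]),
  `console.log(${generate(["square", ["+", 2, 3]])});`,
].join("\n");

console.log(js);
// function square(x) { return (x * x); }
// console.log(square((2 + 3)));
```

The generated text can then be executed by any JS engine; for instance, `new Function(js)()` prints 25.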

You may find more info and enroll in the course on the class page.

Building a Parser from scratch

Once you have a fully working runtime of a programming language, it is a good time to think about ergonomic syntax.

Yes, the syntax and the runtime of a language often intersect and depend on each other deeply; however, the same runtime may have multiple syntaxes. This also allows working with the concept of syntactic sugar, i.e. inventing ergonomic syntactic constructs which delegate to the same runtime semantics.
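Syntactic sugar is often implemented as a desugaring pass that rewrites new constructs into core forms before evaluation. In this hypothetical sketch, `++`, `+=`, and `for` are expanded into `set`, `begin`, and `while`, which the runtime is assumed to support already; the `desugar` function and all form names are illustrative.

```javascript
// Hypothetical desugaring pass: sugar forms are rewritten into core forms
// that the runtime already understands, so the evaluator needs no changes.
function desugar(exp) {
  if (!Array.isArray(exp)) return exp;

  // Desugar children first (bottom-up), so nested sugar is expanded too.
  const [op, ...args] = exp.map(desugar);
  switch (op) {
    case "++": // (++ x)  =>  (set x (+ x 1))
      return ["set", args[0], ["+", args[0], 1]];
    case "+=": // (+= x e)  =>  (set x (+ x e))
      return ["set", args[0], ["+", args[0], args[1]]];
    case "for": {
      // (for init cond step body)  =>  (begin init (while cond (begin body step)))
      const [init, cond, step, body] = args;
      return ["begin", init, ["while", cond, ["begin", body, step]]];
    }
    default:
      return [op, ...args];
  }
}

const loop = ["for", ["var", "i", 0], ["<", "i", 3], ["++", "i"], ["+=", "sum", "i"]];
console.log(JSON.stringify(desugar(loop)));
// ["begin",["var","i",0],["while",["<","i",3],["begin",["set","sum",["+","sum","i"]],["set","i",["+","i",1]]]]]
```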

In this course we build a manual Recursive-descent parser, also from scratch. You will learn how to construct Abstract Syntax Tree (AST) nodes, how the tokenizer and the parsing process work, and also see different AST formats. For the implementation we again use JavaScript.
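A minimal sketch of the two stages: a regex-driven tokenizer and a recursive-descent parser with one method per grammar rule. The grammar covers only arithmetic; the token types and the `NumericLiteral`/`BinaryExpression` node shapes are assumptions loosely modeled on ESTree-style ASTs, not the course's exact format.

```javascript
// Hypothetical token spec: [regex, token type]; a null type means "skip".
const SPEC = [
  [/^\s+/, null],
  [/^\d+/, "NUMBER"],
  [/^[+\-]/, "ADDITIVE_OPERATOR"],
  [/^[*\/]/, "MULTIPLICATIVE_OPERATOR"],
  [/^\(/, "("],
  [/^\)/, ")"],
];

// Tokenizer: repeatedly match the first rule that fits the rest of the input.
function tokenize(input) {
  const tokens = [];
  let pos = 0;
  while (pos < input.length) {
    const rest = input.slice(pos);
    const rule = SPEC.find(([re]) => re.test(rest));
    if (!rule) throw new SyntaxError(`Unexpected token: "${rest[0]}"`);
    const [re, type] = rule;
    const lexeme = re.exec(rest)[0];
    if (type !== null) tokens.push({ type, value: lexeme });
    pos += lexeme.length;
  }
  return tokens;
}

// Recursive-descent parser: one method per grammar rule.
class Parser {
  parse(input) {
    this.tokens = tokenize(input);
    this.pos = 0;
    const ast = this.AdditiveExpression();
    if (this.pos < this.tokens.length) throw new SyntaxError("Unexpected trailing input");
    return ast;
  }

  lookahead() {
    return this.tokens[this.pos]?.type;
  }

  eat(type) {
    const token = this.tokens[this.pos];
    if (token?.type !== type) throw new SyntaxError(`Expected ${type}`);
    this.pos++;
    return token;
  }

  // AdditiveExpression : MultiplicativeExpression (ADDITIVE_OPERATOR MultiplicativeExpression)*
  AdditiveExpression() {
    return this.BinaryExpression("MultiplicativeExpression", "ADDITIVE_OPERATOR");
  }

  // MultiplicativeExpression : PrimaryExpression (MULTIPLICATIVE_OPERATOR PrimaryExpression)*
  MultiplicativeExpression() {
    return this.BinaryExpression("PrimaryExpression", "MULTIPLICATIVE_OPERATOR");
  }

  // Builds a left-associative chain of BinaryExpression nodes.
  BinaryExpression(operandRule, operatorType) {
    let left = this[operandRule]();
    while (this.lookahead() === operatorType) {
      const operator = this.eat(operatorType).value;
      const right = this[operandRule]();
      left = { type: "BinaryExpression", operator, left, right };
    }
    return left;
  }

  // PrimaryExpression : NUMBER | "(" AdditiveExpression ")"
  PrimaryExpression() {
    if (this.lookahead() === "(") {
      this.eat("(");
      const expression = this.AdditiveExpression();
      this.eat(")");
      return expression;
    }
    return { type: "NumericLiteral", value: Number(this.eat("NUMBER").value) };
  }
}

console.log(JSON.stringify(new Parser().parse("2 + 3 * 4")));
```

Because `MultiplicativeExpression` is called from within `AdditiveExpression`, `*` binds tighter than `+`: the root of the printed AST is the `+` node, and the loop in `BinaryExpression` makes both operator levels left-associative.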

Again, you may find more info and enroll in the course on the class page.

In addition, the Interpreter and the Parser classes may be taken as the Programming Language Bundle.

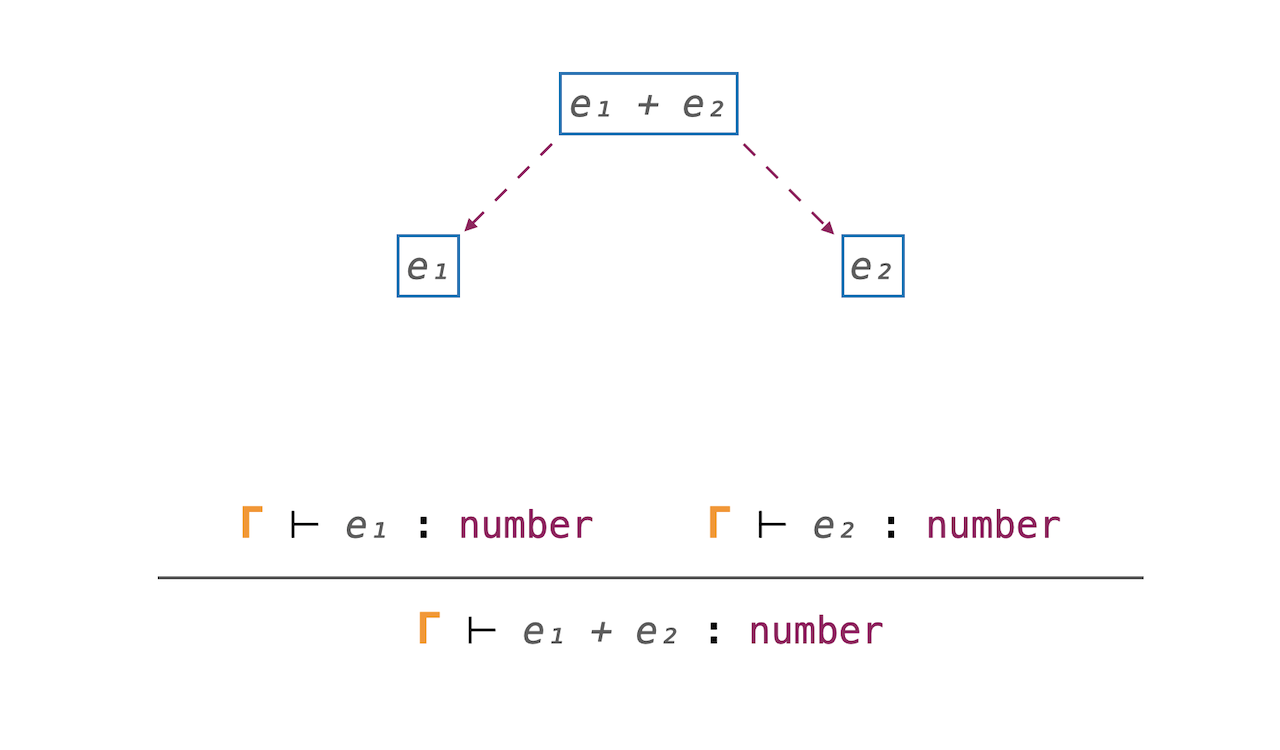

Building a Typechecker from scratch

On top of your dynamic programming language, you may consider adding a static typechecker module.

Untyped programs are often prone to errors that surface only at runtime. An extra type checker may improve the robustness of your programs, as well as their documentation and ergonomics.

In this course we build a Typechecker for the Eva programming language, also from scratch. You will learn about type systems and type theory, see the difference between static and dynamic type checking, and get familiar with type checking and type inference algorithms. For the implementation we use JavaScript.
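To show checking and inference side by side, here is a hedged sketch of a tiny typechecker over Eva-like S-expressions. Types are plain strings, and `tc`, `expectType`, and the `(var (x type) init)` annotation syntax are assumptions for illustration, not the course's API.

```javascript
// Hypothetical sketch: types are plain strings, and `tc` infers the type of
// an Eva-like S-expression, throwing a TypeError before the program ever runs.
function expectType(actual, expected, exp) {
  if (actual !== expected) {
    throw new TypeError(`Expected "${expected}" for ${JSON.stringify(exp)}, but got "${actual}"`);
  }
}

function tc(exp, env = new Map()) {
  if (typeof exp === "number") return "number";
  if (typeof exp === "boolean") return "boolean";
  if (typeof exp === "string" && exp.startsWith('"')) return "string";
  if (typeof exp === "string") {
    if (!env.has(exp)) throw new TypeError(`Undefined variable: ${exp}`);
    return env.get(exp);
  }

  const [op, ...args] = exp;
  switch (op) {
    case "+": {
      // Overloaded: number + number or string + string.
      const t = tc(args[0], env);
      if (t !== "number" && t !== "string") throw new TypeError(`Cannot apply "+" to "${t}"`);
      expectType(tc(args[1], env), t, args[1]);
      return t;
    }
    case ">": case "<": {
      expectType(tc(args[0], env), "number", args[0]);
      expectType(tc(args[1], env), "number", args[1]);
      return "boolean";
    }
    case "var": {
      // (var x init) infers x's type; (var (x type) init) checks it.
      const [decl, init] = args;
      const initType = tc(init, env);
      const [name, declared] = Array.isArray(decl) ? decl : [decl, initType];
      expectType(initType, declared, init);
      env.set(name, declared);
      return declared;
    }
    case "if": {
      // The condition must be boolean; both branches must agree.
      expectType(tc(args[0], env), "boolean", args[0]);
      const thenType = tc(args[1], env);
      expectType(tc(args[2], env), thenType, args[2]);
      return thenType;
    }
    case "begin": {
      // A block gets its own scope: a copy of the enclosing type environment.
      const blockEnv = new Map(env);
      let result;
      for (const e of args) result = tc(e, blockEnv);
      return result;
    }
    default:
      throw new TypeError(`Unknown form: ${op}`);
  }
}

console.log(tc(["begin", ["var", "x", 10], ["if", [">", "x", 5], '"big"', '"small"']])); // string
```

Here the type of `x` is inferred from its initializer, while an annotated declaration such as `(var (x string) 42)` is checked and rejected.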

You may find more info and enroll in the course on the class page.

Building a Virtual Machine from scratch

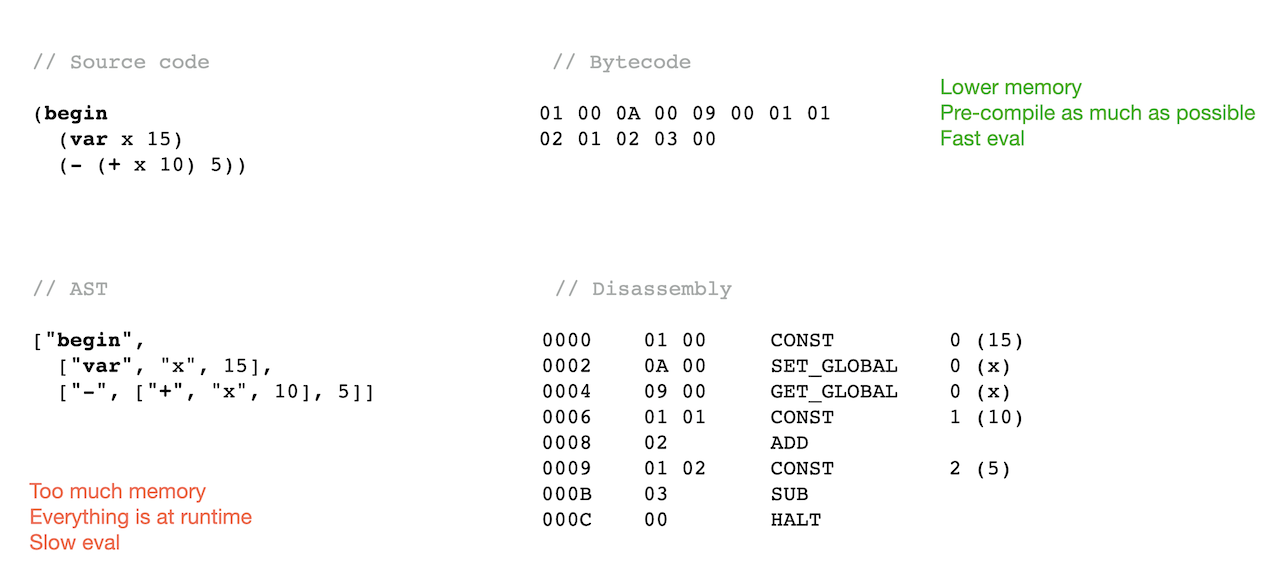

By this point you should already have a fully working programming language with ergonomic syntax, and understand how languages work at a higher level. Now it is time to move on to production-level VMs, improving performance and understanding low-level semantics.

In this course we build a Stack-based Virtual Machine from scratch. Basic C++ experience and the Interpreters course are prerequisites for this class. However, we avoid overly specific C++ constructs, so the code should be easily transferable to other languages.

Students will learn the concepts of bytecode, the compilation process, and lower-level interpretation. Stack- and heap-allocated values, as well as Garbage Collection, are also covered. As a result we implement a VM similar in semantics to Python, JavaScript, and other languages, with full support for functional programming, closures, and OOP.
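The following is a minimal sketch of the bytecode idea, written in JavaScript to match the other examples here rather than in the C++ used in the course. The opcodes, their numeric values, and the `compile`/`run` functions are hypothetical; the sketch covers only arithmetic, with no heap values or GC.

```javascript
// Hypothetical opcodes and bytecode layout, chosen only for illustration.
const OP_CONST = 0; // OP_CONST <index>: push constants[index]
const OP_ADD = 1;
const OP_SUB = 2;
const OP_MUL = 3;
const OP_HALT = 4; // stop and return the top of the stack

const BINARY_OPS = { "+": OP_ADD, "-": OP_SUB, "*": OP_MUL };

// Compiler: S-expression AST -> flat bytecode array plus a constant pool.
function compile(exp) {
  const code = [];
  const constants = [];
  const gen = (e) => {
    if (typeof e === "number") {
      code.push(OP_CONST, constants.length);
      constants.push(e);
      return;
    }
    const [op, left, right] = e;
    const opcode = BINARY_OPS[op];
    if (opcode === undefined) throw new SyntaxError(`Unknown operator: ${op}`);
    gen(left); // operands first (post-order traversal)...
    gen(right);
    code.push(opcode); // ...then the instruction that consumes them
  };
  gen(exp);
  code.push(OP_HALT);
  return { code, constants };
}

// Interpreter loop: fetch an opcode, decode it, execute it on the stack.
function run({ code, constants }) {
  const stack = [];
  let ip = 0; // instruction pointer
  for (;;) {
    const opcode = code[ip++];
    switch (opcode) {
      case OP_CONST:
        stack.push(constants[code[ip++]]);
        break;
      case OP_ADD:
      case OP_SUB:
      case OP_MUL: {
        const b = stack.pop(); // right operand is on top
        const a = stack.pop();
        stack.push(opcode === OP_ADD ? a + b : opcode === OP_SUB ? a - b : a * b);
        break;
      }
      case OP_HALT:
        return stack.pop();
      default:
        throw new Error(`Unknown opcode ${opcode} at ${ip - 1}`);
    }
  }
}

const program = compile(["-", ["*", 3, 4], 2]); // (- (* 3 4) 2)
console.log(program.code.join(" ")); // 0 0 0 1 3 0 2 2 4
console.log(run(program)); // 10
```

Keeping literals in a constant pool means every instruction stays a small integer; a full VM would add jumps, locals, function calls, and heap-allocated objects on top of this same fetch-decode-execute loop.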

You may find more info and enroll in the course on the class page.

In addition, the runtime classes can be taken as the Interpreters and Virtual Machines bundle.

Programming Language with LLVM

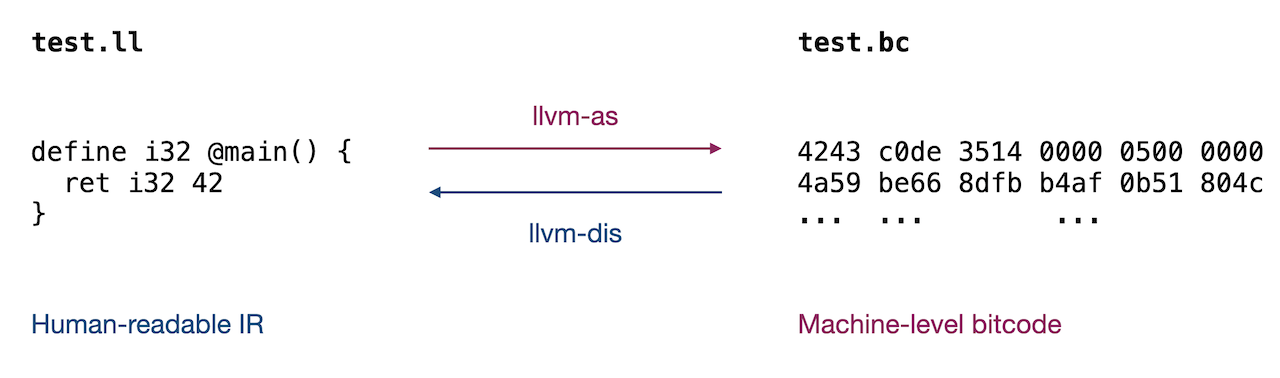

After implementing a custom low-level VM, it is worth considering a production-level compiler infrastructure such as LLVM.

In this course we compile our programming language down to LLVM IR. Basic C++ experience and the Interpreters course are prerequisites for this class. However, we avoid overly specific C++ constructs, so the code should be easily transferable to other languages.

Students will learn the concepts of an LLVM frontend, the IR, and lower-level compilation. Details of stack- and heap-allocated values, as well as Garbage Collection, are also covered. As a result you will understand how Clang, Rust, and other LLVM-based compilers work.
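For a taste of what an LLVM frontend produces, here is a hypothetical JavaScript sketch that lowers arithmetic S-expressions to textual LLVM IR in SSA form. `emitIR` is an illustrative name; a real frontend would typically build IR through LLVM's C++ `IRBuilder` API rather than by concatenating strings.

```javascript
// Hypothetical sketch: lower an arithmetic S-expression to textual LLVM IR.
const LLVM_OPS = { "+": "add", "-": "sub", "*": "mul" };

function emitIR(exp) {
  const lines = [];
  let counter = 0;
  // Returns the IR operand (an inline constant or an SSA register) for `e`.
  const gen = (e) => {
    if (typeof e === "number") return String(e); // i32 constants are used inline
    const [op, left, right] = e;
    const instr = LLVM_OPS[op];
    if (!instr) throw new Error(`Unsupported operator: ${op}`);
    const a = gen(left);
    const b = gen(right);
    const result = `%t${counter++}`; // each register is assigned exactly once (SSA)
    lines.push(`  ${result} = ${instr} i32 ${a}, ${b}`);
    return result;
  };
  const value = gen(exp);
  return ["define i32 @main() {", "entry:", ...lines, `  ret i32 ${value}`, "}"].join("\n");
}

console.log(emitIR(["-", ["*", 3, 4], 2]));
// define i32 @main() {
// entry:
//   %t0 = mul i32 3, 4
//   %t1 = sub i32 %t0, 2
//   ret i32 %t1
// }
```

The printed module should be accepted by LLVM tools such as `lli`, which would run `main` and exit with status 10.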

You may find more info and enroll in the course on the class page.

By the end of the practical courses you should have a fully working programming language. The following classes may be taken in any order to extend the learned concepts and to understand the topics more deeply.

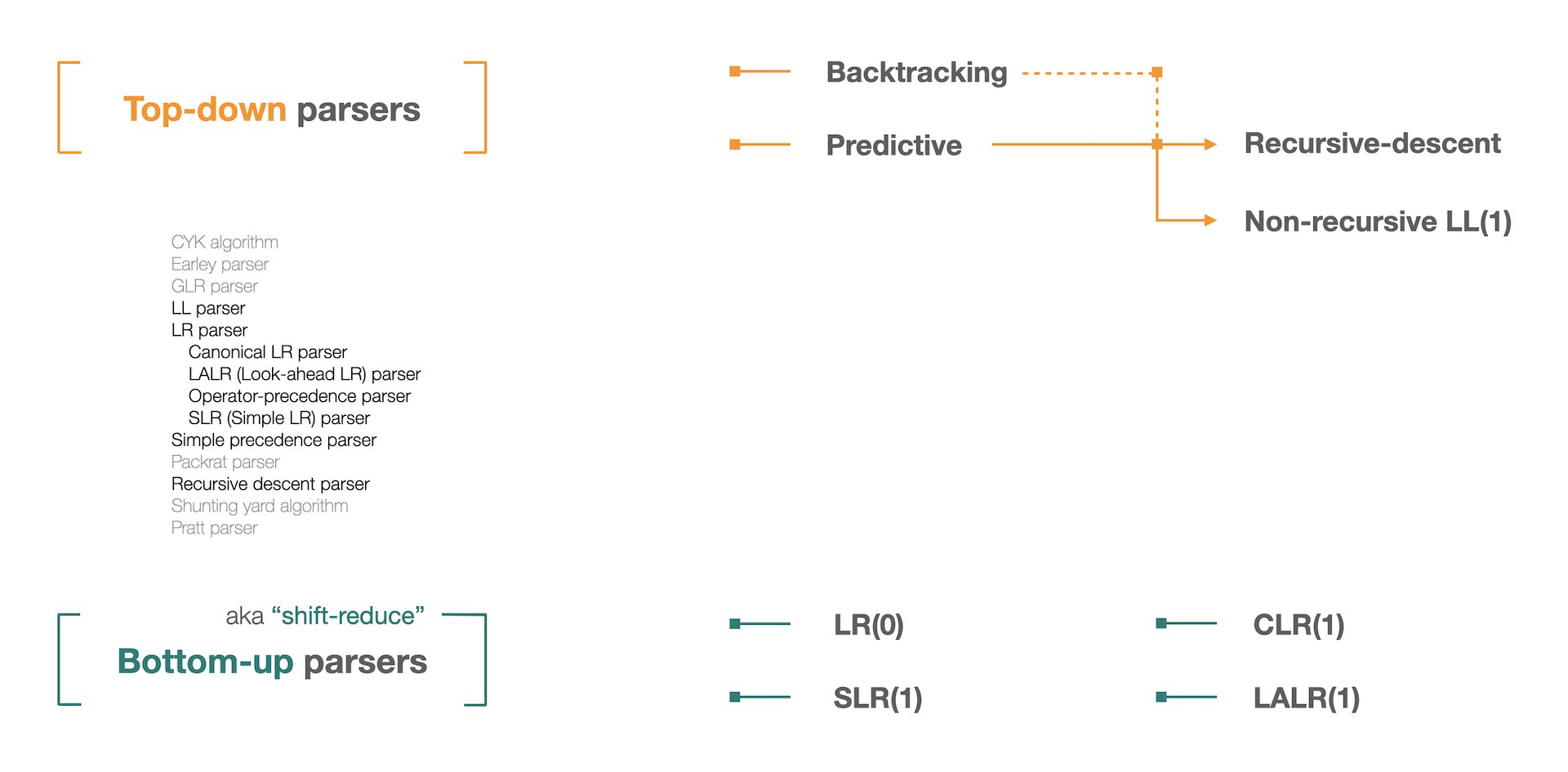

Parsing Algorithms

This course focuses on the theory of the parsing process, going deeper into automated LL and LR parsers. The class combines theory and practice.

Students will learn the full algorithms of LL(1), LALR(1), and other parsers, and will also implement a parser for a full programming language using the Syntax parser generator tool. For the implementation we use JavaScript.
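As a small illustration of a table-driven LL(1) parser, here is a sketch for a hypothetical grammar of balanced parentheses. The table is written by hand here, whereas a parser generator computes it from FIRST/FOLLOW sets. `TABLE` and `llParse` are illustrative names, unrelated to the Syntax tool's API.

```javascript
// Hypothetical LL(1) grammar (balanced parentheses):
//   S -> ( S ) S
//   S -> ε
// TABLE[nonterminal][lookahead] = right-hand side to expand to.
const TABLE = {
  S: { "(": ["(", "S", ")", "S"], ")": [], $: [] },
};

function llParse(input) {
  const tokens = [...input, "$"]; // "$" marks the end of input
  const stack = ["$", "S"]; // the top of the stack is the last element
  let pos = 0;
  while (stack.length > 0) {
    const top = stack.pop();
    const lookahead = tokens[pos];
    if (top in TABLE) {
      const production = TABLE[top][lookahead];
      if (production === undefined) return false; // empty table cell: syntax error
      // Push the right-hand side in reverse so its first symbol ends up on top.
      for (let i = production.length - 1; i >= 0; i--) stack.push(production[i]);
    } else if (top === lookahead) {
      pos++; // terminal (or the end marker) matched
    } else {
      return false;
    }
  }
  return pos === tokens.length;
}

console.log(llParse("(())()")); // true
console.log(llParse("(()")); // false
```

A single token of lookahead always selects at most one production, which is exactly the property that makes a grammar LL(1).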

You may find more info and enroll in the course on the class page.

Garbage Collection Algorithms

In the Virtual Machines class we implemented a Mark-Sweep garbage collector. Now it is a good time to go deeper into GC algorithms and learn the theory and implementation strategies behind them.

Students will learn all the major GC techniques used in practice today. The class is mainly theoretical, explaining the concepts of Tracing and Direct collectors, and discussing Generational and Region-based collectors, the Tri-color abstraction, and other topics. The specific algorithms considered are Mark-Sweep, Mark-Compact, Reference Counting, Copying, Immix, and the G1 collector.
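A minimal sketch of the Mark-Sweep idea over a toy heap model (the `Heap` class and its object layout are hypothetical): the mark phase traces everything reachable from the roots, and the sweep phase reclaims the rest. Unlike plain Reference Counting, a tracing collector also reclaims unreachable cycles, as the `c`/`d` pair in the example shows.

```javascript
// Hypothetical heap model: each object records its outgoing references.
class Heap {
  constructor() {
    this.objects = new Set();
  }

  allocate(name) {
    const obj = { name, refs: [], marked: false };
    this.objects.add(obj);
    return obj;
  }

  // Mark phase: trace everything reachable from the roots.
  mark(roots) {
    const worklist = [...roots];
    while (worklist.length > 0) {
      const obj = worklist.pop();
      if (obj.marked) continue;
      obj.marked = true;
      worklist.push(...obj.refs);
    }
  }

  // Sweep phase: reclaim unmarked objects and clear marks for the next cycle.
  sweep() {
    const freed = [];
    for (const obj of this.objects) {
      if (obj.marked) {
        obj.marked = false;
      } else {
        this.objects.delete(obj);
        freed.push(obj.name);
      }
    }
    return freed;
  }

  collect(roots) {
    this.mark(roots);
    return this.sweep();
  }
}

const heap = new Heap();
const a = heap.allocate("a");
const b = heap.allocate("b");
const c = heap.allocate("c");
const d = heap.allocate("d");
a.refs.push(b); // a -> b (reachable from the root)
c.refs.push(d); // c -> d -> c: a cycle with no path from the roots
d.refs.push(c);

console.log(heap.collect([a])); // [ 'c', 'd' ]
```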

The course will be interesting to any curious programmer and is also recommended for professional compiler engineers.

You can find more info and enroll in the course on the class page.

Automata Theory: inside a RegExp machine

This theoretical course is devoted to the formalism of automata, discussing the state machines used to implement Regular Expressions.

The concepts of NFA (Non-deterministic Finite Automata) and DFA (Deterministic Finite Automata) are considered. These machines are also used to implement tokenizer modules in the parsing process. In addition, the topic of DFA minimization is described. An implementation skeleton shows how to build a RegExp machine.
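Here is a hedged sketch of an NFA-based RegExp machine: Thompson-style construction of NFA fragments from the basic operations (character, concatenation, alternation, Kleene star), followed by a simulation that tracks the set of current states. `State`, `char`, `concat`, `or`, `rep`, and `matches` are illustrative names. Converting such an NFA to a DFA (subset construction) and then minimizing the DFA would build on this sketch.

```javascript
// NFA state: `transitions` maps a symbol (or EPSILON) to a list of states.
const EPSILON = "ε";

class State {
  constructor(accepting = false) {
    this.accepting = accepting;
    this.transitions = new Map();
  }
  addTransition(symbol, state) {
    if (!this.transitions.has(symbol)) this.transitions.set(symbol, []);
    this.transitions.get(symbol).push(state);
  }
}

// A fragment has one input state and one accepting output state.
// Fragments are mutated when combined, so each should be used only once.
const fragment = (inState, outState) => ({ inState, outState });

// Single character: in --c--> out
function char(c) {
  const inState = new State();
  const outState = new State(true);
  inState.addTransition(c, outState);
  return fragment(inState, outState);
}

// Concatenation ab: a.out --ε--> b.in
function concat(a, b) {
  a.outState.accepting = false;
  a.outState.addTransition(EPSILON, b.inState);
  return fragment(a.inState, b.outState);
}

// Alternation a|b: new in --ε--> a.in and b.in; a.out and b.out --ε--> new out
function or(a, b) {
  const inState = new State();
  const outState = new State(true);
  for (const f of [a, b]) {
    inState.addTransition(EPSILON, f.inState);
    f.outState.accepting = false;
    f.outState.addTransition(EPSILON, outState);
  }
  return fragment(inState, outState);
}

// Kleene star a*: allow skipping `a` entirely, and looping back to repeat it.
function rep(a) {
  const inState = new State();
  const outState = new State(true);
  a.outState.accepting = false;
  inState.addTransition(EPSILON, a.inState);
  inState.addTransition(EPSILON, outState);
  a.outState.addTransition(EPSILON, a.inState);
  a.outState.addTransition(EPSILON, outState);
  return fragment(inState, outState);
}

// Epsilon-closure: all states reachable through ε-transitions only.
function epsilonClosure(states) {
  const result = new Set(states);
  const stack = [...states];
  while (stack.length > 0) {
    const s = stack.pop();
    for (const next of s.transitions.get(EPSILON) ?? []) {
      if (!result.has(next)) {
        result.add(next);
        stack.push(next);
      }
    }
  }
  return result;
}

// Simulate all paths at once by tracking the set of current states.
function matches(nfa, input) {
  let current = epsilonClosure([nfa.inState]);
  for (const c of input) {
    const next = [];
    for (const s of current) next.push(...(s.transitions.get(c) ?? []));
    current = epsilonClosure(next);
  }
  return [...current].some((s) => s.accepting);
}

// a(b|c)*
const re = concat(char("a"), rep(or(char("b"), char("c"))));
console.log(matches(re, "abcb")); // true
console.log(matches(re, "ad")); // false
```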

Again, you can find more info and enroll in the course on the class page.

Recommended literature

The following classic and practical books are recommended for further reading:

- “Compilers: Principles, Techniques, and Tools” (aka “Dragon Book”), Alfred V. Aho, Monica S. Lam, Ravi Sethi, and Jeffrey D. Ullman

- “The Garbage Collection Handbook: The Art of Automatic Memory Management”, Antony Hosking, Eliot Moss, and Richard Jones

- “Parsing Techniques: A Practical Guide”, Dick Grune and Ceriel J.H. Jacobs

- “Structure and Interpretation of Computer Programs (SICP)”, Harold Abelson and Gerald Jay Sussman

- “Programming Languages: Application and Interpretation (PLAI)”, Shriram Krishnamurthi

- “Types and Programming Languages”, Benjamin C. Pierce

I hope you find the journey into the world of compilers, virtual machines, and programming languages interesting and engaging, and that these courses will be great learning material for the programming language you invent.

Thanks, and see you in the class!

Sincerely,

Dmitry Soshnikov